Our Areas of Focus

We are transforming the understanding of the human brain and its complex processes, from birth through to old age, so that we can better nurture and maintain its cognitive performance as it develops and understand how it combats stress, disease, and disorders as we age.

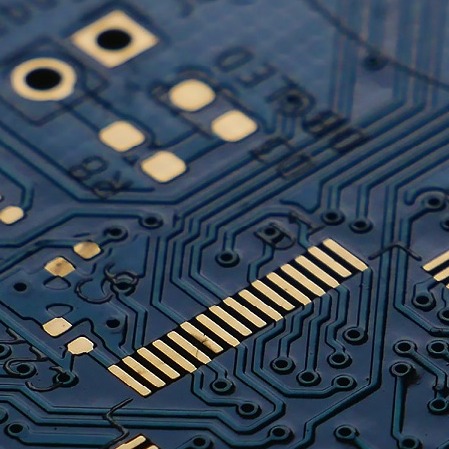

Using traditional and neuromorphic sensor technology, artificial intelligence (AI), and machine learning (ML), we are shaping how sectors such as manufacturing, health, and defence capture, analyse, and visualise data to improve decision-making.

We combine our deep understanding of the human brain and human behaviour with systems design and engineering to reduce the complexity of information capture and processing in environments that can change abruptly, where every second counts.

Comprehensive knowledge of early brain development is crucial to establishing “best-practice” methods for optimal child development. It is also pivotal to the diagnosis, assessment, and mitigation of diseases and disorders as we progress through life.

The arts permeate every aspect of human existence. We bring together musicians, performers, composers, psychologists, neuroscientists, linguists, and engineers to measure how art affects the brain at a neurological level and to understand why it has such wide-ranging benefits.

From the moment we are born, our ability to communicate develops and adapts based on our physical abilities, social settings, cultural contexts, and brain function. We are transforming our understanding of how humans produce and perceive communication across the lifespan.