About Astrosite

Greg Cohen vividly recalls the moment that changed the course of his career. He and his colleagues were star-gazing through a telescope rigged with a prototype camera that they were working on in his engineering lab at Western Sydney University’s MARCS Institute for Brain, Behaviour and Development. Suddenly, the camera picked up a mysterious bright object streaking across the sky. “It was spectacular,” says Cohen.

The team realised that Cohen’s camera had captured a satellite speeding across the sky in real-time. Standard cameras — even the sophisticated models used by professional astronomers — can’t perform this feat as satellites are simply too fast to register as they pass by. But rather than snapping images in the conventional way, Cohen’s camera was designed to mimic the way the eye works.

Now Cohen is working with one of his former PhD supervisors, Professor Andre van Schaik, and the team at the newly formed International Centre for Neuromorphic Systems to develop their biologically-inspired imaging systems to track the space junk that is zooming around and in danger of crashing into Earth. The technology could also be used to spot asteroids on a collision course with our planet, and for navigation by spacecraft, drones, and submersibles.

Cohen concedes that his path into space-tracking was a bit unusual. Born in South Africa, he originally applied to the University of Cape Town to study medicine, but was instead accepted into an electrical engineering course.

After graduating, he worked as an engineer for a while but, feeling restless, decided to study finance and tried his hand at share portfolio management. “It was a disaster,” laughs Cohen. Still dissatisfied, six years ago he embarked on a PhD, jointly at Western and at the University Pierre and Marie Curie in Paris, in the intriguingly-named field of neuromorphic engineering, which involves developing biologically-inspired electronics.

It was during his PhD that Cohen started working on cameras being designed by his lab-mates. Dubbed “silicon retinas”, these cameras borrow the abilities of the human eye. Normal cameras, Cohen explains, can be relatively inefficient for tasks such as tracking the motion of specific objects, because they are built to capture everything in a scene. The hope is that there will be enough visual information recorded in the photograph for our eyes to figure out what is going on. “Biological eyes don’t work like that: they don’t see what they don’t need to see,” says Cohen. Rather, animal eyes are highly refined and have adapted to see only things pertinent to their survival and to the functions they need to perform. “Biology is much more careful about extracting only the information that it needs,” says Cohen.

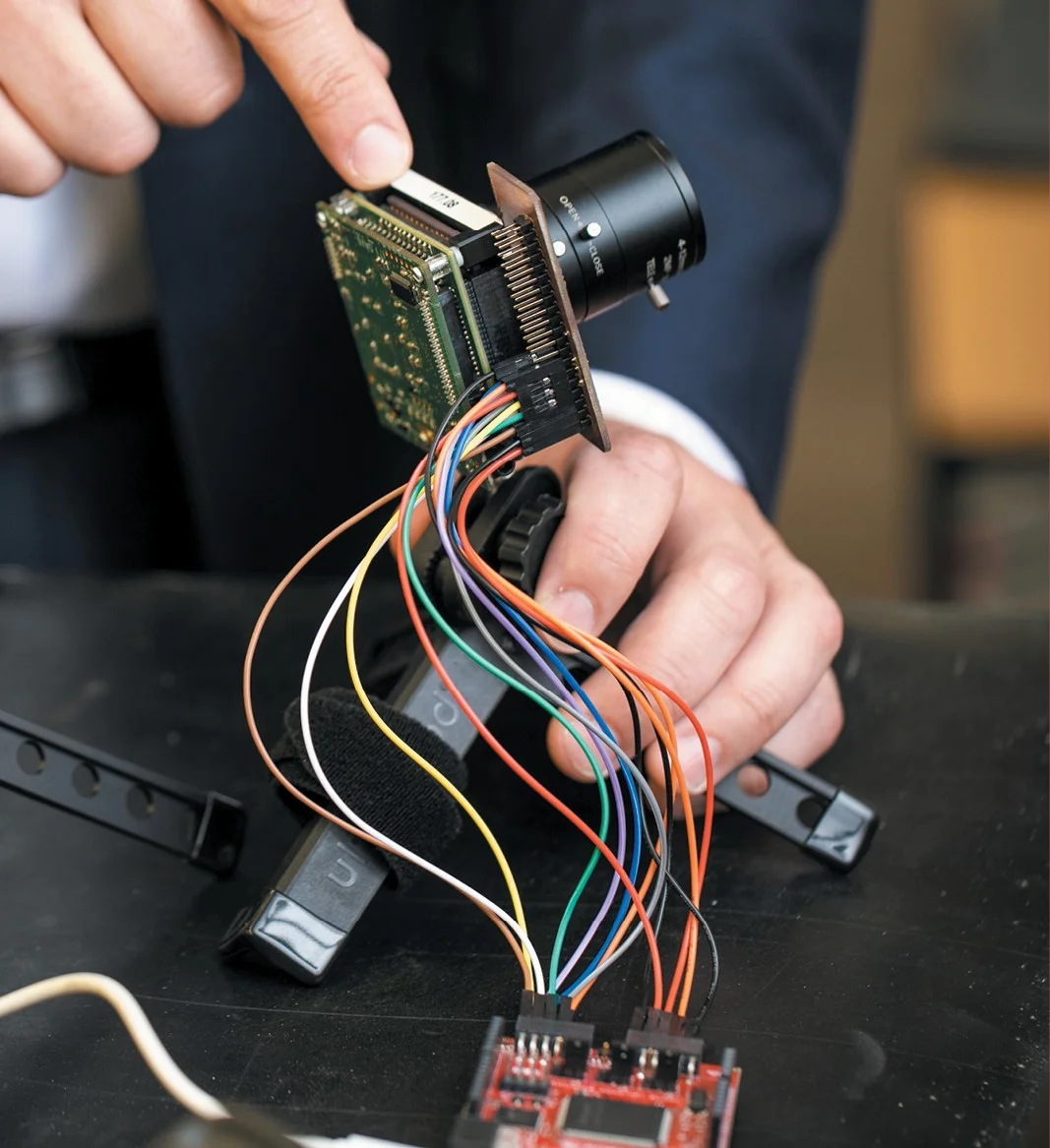

With that alternative paradigm for image capture in mind, Cohen and his colleague Saeed Afshar have been working with cameras made up of a grid of independent pixels that each work like a photoreceptor in the eye. Rather than photographing static images, the pixels only fire when they see movement. It’s this quality that makes them ideal for tracking fast-moving satellites in real time. As Cohen explains, that is a crucial application, given our reliance on satellites for communications and GPS. When old satellites are eventually abandoned and left to float in space, they become potentially dangerous junk.
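For readers who like to see an idea in code, the "pixels only fire when they see movement" principle can be sketched in a few lines of Python. This is a toy model, not the camera's actual design: a real silicon retina does this asynchronously in hardware, whereas the sketch compares successive frames. The function names, the log-brightness comparison and the threshold of 0.2 are all assumptions made for illustration.

```python
import math


def events_from_frames(frames, threshold=0.2):
    """Return (frame_index, row, col, polarity) tuples for every pixel whose
    log-brightness moved by more than `threshold` since that pixel last fired.
    The threshold value is an illustrative assumption."""
    # Each pixel independently remembers the log-brightness at which it last fired.
    reference = [[math.log(v) for v in row] for row in frames[0]]
    events = []
    for t, frame in enumerate(frames[1:], start=1):
        for r, row in enumerate(frame):
            for c, value in enumerate(row):
                change = math.log(value) - reference[r][c]
                if abs(change) > threshold:
                    # Polarity +1 means the pixel got brighter, -1 dimmer.
                    events.append((t, r, c, 1 if change > 0 else -1))
                    reference[r][c] = math.log(value)
    return events


def make_frame(spot_col, width=5, height=3, background=100.0, spot=50.0):
    """Hypothetical daytime scene: a uniformly bright sky with one slightly
    brighter 'satellite' pixel in the middle row."""
    frame = [[background] * width for _ in range(height)]
    frame[1][spot_col] += spot
    return frame


# The bright spot drifts one pixel to the right in each successive frame.
frames = [make_frame(col) for col in range(4)]
events = events_from_frames(frames)

pixel_readings = sum(len(row) for frame in frames[1:] for row in frame)
print(len(events), "events versus", pixel_readings, "pixel readings")
for event in events:
    print(event)
```

In this toy scene the steady background never triggers anything, however bright it is. Only the two pixels the spot leaves and enters report a change at each step, so the output is a short list of events rather than a full image per frame.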

“We’ve been launching things into space for just over 60 years now,” Cohen notes. “It’s getting crowded up there.” That means that the chance of collisions between satellites is high — and when satellites crash, they break apart. The International Space Station is regularly struck by such debris, moving at bullet speed, with larger objects able to puncture a hole in the station’s side.

The doomsday scenario, outlined in the 1970s and known as the Kessler syndrome, is that a ring of high-speed junk will build up around Earth, making it too dangerous for us to launch craft into space and pinning us to the planet. Such a ring of debris would also disrupt global communications, wireless internet, military intelligence and a host of other applications that rely on satellites to function. Though we’re a long way from that, the issue of space safety is taken seriously, notes Wing Commander Steven Henry, the deputy director of space surveillance with the Royal Australian Air Force, which is supporting Cohen’s project. “There are more and more satellites, so the debris has increased,” says Henry. “We can’t go on without paying attention.”

For Henry, a major attraction of the silicon-retina camera is that it doesn’t just pick out satellites at night, but also during the day, something even the best optical telescope systems cannot do. The camera can do this because its individual pixels do not needlessly image the background daylight, so they do not become saturated.

Today, the gold standard for tracking satellites is radar, which works in the day and at night, but is power-hungry and expensive. By contrast, because the pixels only fire when necessary, Cohen’s camera drains very little power, which is another advantage. “That’s ideal for putting into orbit or at a remote sensing site,” says Henry. He hopes that they may be able to use the technology to start to build a critical network of sensors.

Need to know

- Biologically-inspired cameras can track speeding satellites by mimicking how an eye works

- Tracking and managing debris in space is critical in ensuring both future space travel and communications on Earth

Most exciting for Cohen is that these benefits were found using general-purpose prototypes of the camera. These were originally built by the first generation of neuromorphic researchers to help investigate how the eye works, rather than specifically for space applications, and are now produced by companies in France and Switzerland. His WSU team is now tailoring the designs and the processing algorithms for space tracking to see how much better they will perform.

Cohen is also in talks with NASA and ESA scientists about using the cameras for deep space navigation. They could potentially be placed on orbital stations to look for early signs of asteroids veering dangerously close to Earth. Cohen also hopes that the cameras could one day find their way on to autonomous vehicles and drones, and be used by submersibles for underwater searches.

The take-home message, according to Cohen, and the idea that inspired him to come up with these applications for silicon retinas in the first place, is that engineers still have much to learn from biology.

“I can have a sandwich for breakfast and then do tasks that high-powered computers and robots can’t do — and I do them faster, and far more reliably, using far less power,” says Cohen. “Clearly biology is doing things in a completely different, but far more effective and efficient way, than today’s electronics.”

Meet the Academic | Associate Professor Greg Cohen

Associate Professor Greg Cohen is Program Leader in Neuromorphic Systems – Algorithms and is based at the MARCS Institute’s International Centre for Neuromorphic Systems (ICNS). His research investigates the use of neuromorphic principles and spike-based computation as an adaptive, scalable and low-power control system for high-speed applications. Associate Professor Cohen is also project research lead for Astrosite™, a revolutionary and world-first mobile space situational awareness (SSA) module.

Credit

© Michael Amendolia; © Madmaxer/Getty Images; © releon8211/Getty Images

Future-Makers is published for Western Sydney University by Nature Research Custom Media, part of Springer Nature.